The maintenance phase of a data pipeline covers the ongoing activities needed to keep the pipeline running smoothly and efficiently.
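One routine maintenance check is confirming that each dataset is still being refreshed on schedule. The sketch below assumes a hypothetical 24-hour freshness threshold and a dictionary of last-load timestamps. In practice, both would come from the pipeline's own metadata or scheduler.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical threshold: flag a table if it has not been loaded within this window.
MAX_STALENESS = timedelta(hours=24)


def stale_tables(last_loaded, now, max_staleness=MAX_STALENESS):
    """Return the sorted names of tables whose last load is older than max_staleness.

    last_loaded maps a table name to the timezone-aware datetime of its last successful load.
    """
    return sorted(
        name for name, loaded_at in last_loaded.items()
        if now - loaded_at > max_staleness
    )


if __name__ == "__main__":
    now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
    last_loaded = {
        "orders": datetime(2024, 1, 2, 6, 0, tzinfo=timezone.utc),      # 6 hours old
        "customers": datetime(2023, 12, 30, 6, 0, tzinfo=timezone.utc),  # over 3 days old
    }
    print(stale_tables(last_loaded, now))  # ['customers']
```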

The data processing phase is a stage in a data pipeline that involves performing operations on the data to extract insights or generate results.
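As a minimal illustration of a processing operation, the following group-by aggregation sums a numeric value for each key. The record layout and the `region` and `amount` field names are hypothetical.

```python
from collections import defaultdict


def total_by_key(records, key, value):
    """Sum record[value] across records grouped by record[key]."""
    totals = defaultdict(float)
    for record in records:
        totals[record[key]] += record[value]
    return dict(totals)


if __name__ == "__main__":
    sales = [
        {"region": "north", "amount": 120.0},
        {"region": "south", "amount": 80.0},
        {"region": "north", "amount": 30.0},
    ]
    print(total_by_key(sales, "region", "amount"))  # {'north': 150.0, 'south': 80.0}
```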

Data visualization and reporting are important elements of a data pipeline, as they allow users to understand and make sense of the data being collected and processed.

In the conformed zone of an enterprise data lake, data validation is typically performed to ensure the quality and consistency of the data.
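A validation step in the conformed zone might check each record against a schema and a few business rules. This sketch assumes a hypothetical customer dataset with made-up required fields and allowed country codes. Real rules depend on the data and the organization.

```python
# Hypothetical schema and rule set for a conformed customer dataset.
REQUIRED_FIELDS = {"customer_id": str, "country": str, "signup_year": int}
ALLOWED_COUNTRIES = {"DE", "FR", "US"}


def validate(record):
    """Return a list of problems found in the record; an empty list means it passed."""
    errors = []
    # Schema check: every required field is present and has the expected type.
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in record:
            errors.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            errors.append(f"wrong type for {field}: {type(record[field]).__name__}")
    # Business rule: country codes must come from a known set.
    country = record.get("country")
    if isinstance(country, str) and country not in ALLOWED_COUNTRIES:
        errors.append(f"unknown country code: {country!r}")
    return errors


if __name__ == "__main__":
    print(validate({"customer_id": "c1", "country": "DE", "signup_year": 2021}))  # []
    print(validate({"customer_id": "c2", "country": "XX"}))
```

Records that fail can be routed to a quarantine area instead of being promoted, so downstream zones only see data that passed.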

Curated data refers to data that has been carefully selected, organized, and annotated to be useful for a specific purpose.

The choice of destination system for storing and processing transformed data will depend on the specific requirements of the analysis, training, or visualization.

The data cleansing phase, also known as the data cleaning or data preprocessing phase, is an important step in […]
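Typical cleansing operations include trimming whitespace, normalizing case, dropping records that lack a required value, and removing duplicates. The sketch below applies these operations to a hypothetical `email` field. It is one possible approach, not a complete cleansing routine.

```python
def cleanse(records):
    """Normalize emails, drop records without one, and keep only the first record per email."""
    seen = set()
    cleaned = []
    for record in records:
        # Treat None and empty strings alike, then trim and lowercase.
        email = (record.get("email") or "").strip().lower()
        if not email or email in seen:
            continue
        seen.add(email)
        # Copy the record so the input is not mutated.
        cleaned.append({**record, "email": email})
    return cleaned


if __name__ == "__main__":
    raw = [
        {"email": " Ana@Example.com "},
        {"email": "ana@example.com"},  # duplicate after normalization
        {"email": None},               # missing value
        {"email": "bo@example.com"},
    ]
    print(cleanse(raw))  # [{'email': 'ana@example.com'}, {'email': 'bo@example.com'}]
```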

The data extraction stage in a data pipeline is the first step in the process of transferring data from one or more sources to a target system. It involves extracting data from the source system and preparing it for further processing.
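A minimal extraction sketch, assuming the source is a CSV file with hypothetical `order_id` and `amount` columns. It reads the rows with Python's standard `csv` module and then applies a light preparation step: trimming values and converting `amount` to a number. An in-memory `io.StringIO` stands in for the source so the example is self-contained; with a real file you would pass `open(path, newline="")` instead.

```python
import csv
import io


def extract_csv(source):
    """Read all rows from a CSV text stream into a list of dicts keyed by the header."""
    return list(csv.DictReader(source))


def prepare(rows):
    """Strip whitespace from every value and parse the hypothetical 'amount' column as float."""
    prepared = []
    for row in rows:
        clean = {key: value.strip() for key, value in row.items()}
        clean["amount"] = float(clean["amount"])
        prepared.append(clean)
    return prepared


if __name__ == "__main__":
    source = io.StringIO("order_id,amount\n1, 19.99\n2,5\n")
    print(prepare(extract_csv(source)))
    # [{'order_id': '1', 'amount': 19.99}, {'order_id': '2', 'amount': 5.0}]
```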

An enterprise data lake is a centralized repository that allows businesses to store all their structured and unstructured data at any scale. It is designed to provide a single source of truth for data across an organization and enable data-driven decision-making.

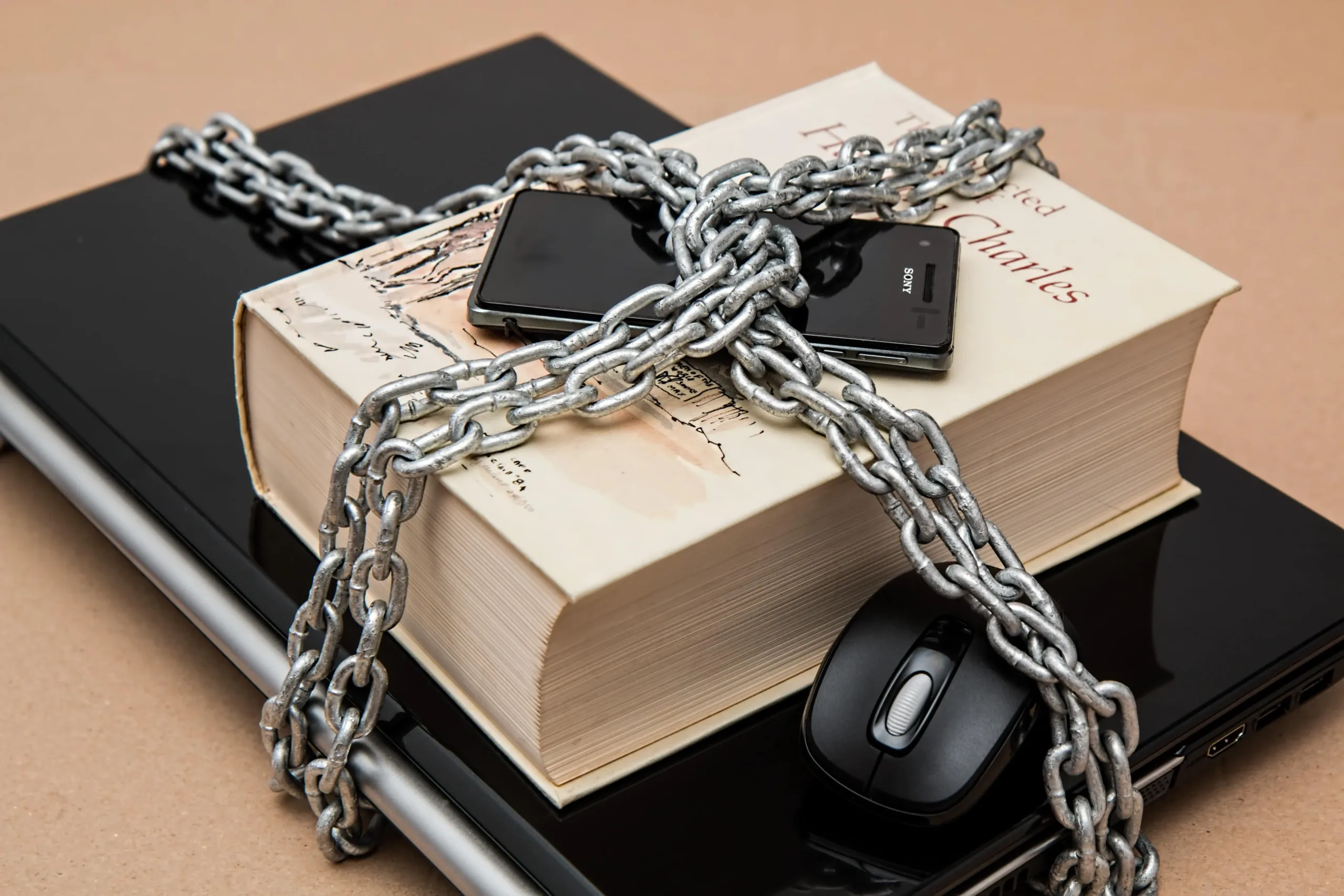

Security is an important consideration when implementing a data pipeline. Ensuring the confidentiality, integrity, and availability of the data being transmitted and stored is critical to the pipeline's success.
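Integrity can be protected by attaching a message authentication code to each batch of data, so the receiver can detect tampering. The sketch below uses HMAC-SHA256 from Python's standard library. The key shown is a placeholder that would normally come from a secrets manager. This only covers integrity: it does not encrypt the data, so confidentiality needs a separate mechanism such as encryption.

```python
import hashlib
import hmac


def sign(payload: bytes, key: bytes) -> str:
    """Compute the HMAC-SHA256 tag that the sender ships alongside the payload."""
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify(payload: bytes, key: bytes, tag: str) -> bool:
    """Recompute the tag on the receiving side; compare_digest avoids timing side channels."""
    return hmac.compare_digest(sign(payload, key), tag)


if __name__ == "__main__":
    key = b"placeholder-key"  # hypothetical; load from a secret store in practice
    tag = sign(b"batch-001 contents", key)
    print(verify(b"batch-001 contents", key, tag))  # True
    print(verify(b"batch-001 tampered", key, tag))  # False
```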

Data pipeline implementations vary. They depend on the type and speed of the data, as well as how frequently it is used. These three factors can be combined in many ways, so there are many possible data pipeline solutions.
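The number of combinations grows multiplicatively with the number of options per factor. For example, with three hypothetical options for each factor, there are 3 × 3 × 3 = 27 combinations, which the following sketch enumerates with `itertools.product`. The category names are illustrative only.

```python
from itertools import product

# Hypothetical options for each factor; real pipelines may use different categories.
DATA_TYPES = ["structured", "semi-structured", "unstructured"]
SPEEDS = ["batch", "micro-batch", "streaming"]
FREQUENCIES = ["hourly", "daily", "on-demand"]

# Cartesian product: every (type, speed, frequency) triple.
combinations = list(product(DATA_TYPES, SPEEDS, FREQUENCIES))

print(len(combinations))  # 27
print(combinations[0])    # ('structured', 'batch', 'hourly')
```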

A data pipeline is a key part of any organization's data infrastructure. It automates the process of moving data from its sources to the systems and tools that need it. This lets the organization use its data more efficiently and with a lower risk of error.
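The automation described above can be pictured as a chain of stages, where each stage receives the previous stage's output. This is a minimal sketch with hypothetical stage functions (`drop_missing`, `double`); a production pipeline would typically rely on a scheduler or a dedicated pipeline framework instead.

```python
from functools import reduce
from typing import Callable, Iterable, List

# A stage takes a list of records and returns a new list of records.
Stage = Callable[[List], List]


def run_pipeline(data: List, stages: Iterable[Stage]) -> List:
    """Run the stages in order, feeding each stage's output into the next."""
    return reduce(lambda result, stage: stage(result), stages, data)


# Hypothetical example stages.
def drop_missing(values: List) -> List:
    return [v for v in values if v is not None]


def double(values: List) -> List:
    return [v * 2 for v in values]


if __name__ == "__main__":
    print(run_pipeline([3, None, 1], [drop_missing, double, sorted]))  # [2, 6]
```

Keeping each stage a small, independent function makes it easy to test stages in isolation and to reorder or replace them.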